JointSampler tutorial¶

In this tutorial, we will show you how easily it is to use the JointSampler provided in SemiPy. This sampler is ideal for Semi-Supervised Learning tasks, as it is creating batches of both labelled and unlabelled items, with respect to user-specified parameters such as 'batch_size' and 'proportion'. The parameter 'proportion' corresponds to the proportion of labelled items that should be included in each batch. For example, if the user choose a proportion of 0.3, then 30% of the batches items will be labelled.

import semipy as smp

import numpy as np

from torch.utils.data import DataLoader

To make a really simple example, let's use fake tabular data in order to really understand how the JointSampler works. The most important point to respect in order to make the sampler work properly is the data itself: unlabelled data should be labelled as -1. This is the only constraint.

Let's use a 3-class dataset of length 20 with items from number 7 to 20 unlabelled (with label -1) :

data = np.array([

(1, 1),

(2, 1),

(3, 1),

(4, 2),

(5, 3),

(6, 3),

(7, -1),

(8, -1),

(9, -1),

(10, -1),

(11, -1),

(12, -1),

(13, -1),

(14, -1),

(15, -1),

(16, -1),

(17, -1),

(18, -1),

(19, -1),

(20, -1)

])

Using SSLDataset class from SemiPy to convert the numpy array into a 'callable' dataset:

dataset = smp.datasets.SSLDataset(data)

Now we can easily 'call' any item in the dataset, for example:

dataset[0]

(1, 1)

Let's define our sampler. As it is considered as a 'batch_sampler', it is here that we choose our parameters such as batch size and proportion.

sampler = smp.sampler.JointSampler(dataset=dataset, batch_size=4, proportion=0.5)

Now in order to simulate some learnin epochs, let's use the sampler in a torch DataLoader:

dataloader = DataLoader(dataset, batch_sampler=sampler)

We chose a batch size of 4 and a proportion of 0.5 so we expect to have at each epoch 4 items with 2 labelled and 2 unlabelled. Let's see:

# We simulate 5 epochs

for epoch in range(5):

print(f'----------Epoch {epoch}----------')

for x, y in dataloader:

print(x)

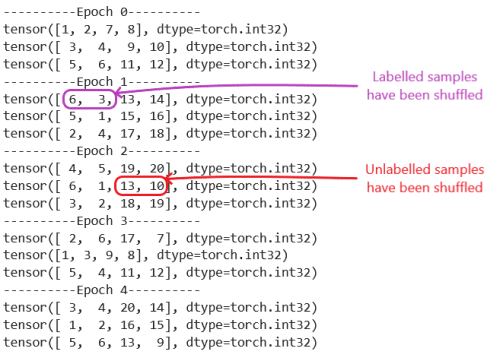

----------Epoch 0---------- tensor([1, 2, 7, 8], dtype=torch.int32) tensor([ 3, 4, 9, 10], dtype=torch.int32) tensor([ 5, 6, 11, 12], dtype=torch.int32) ----------Epoch 1---------- tensor([ 3, 5, 13, 14], dtype=torch.int32) tensor([ 4, 6, 15, 16], dtype=torch.int32) tensor([ 1, 2, 17, 18], dtype=torch.int32) ----------Epoch 2---------- tensor([ 1, 3, 19, 20], dtype=torch.int32) tensor([ 5, 2, 17, 13], dtype=torch.int32) tensor([ 6, 4, 10, 8], dtype=torch.int32) ----------Epoch 3---------- tensor([ 5, 2, 14, 16], dtype=torch.int32) tensor([ 6, 1, 18, 9], dtype=torch.int32) tensor([ 3, 4, 20, 12], dtype=torch.int32) ----------Epoch 4---------- tensor([ 6, 1, 19, 11], dtype=torch.int32) tensor([ 3, 5, 15, 7], dtype=torch.int32) tensor([ 4, 2, 14, 11], dtype=torch.int32)

As we can see, each epoch is divided in 3 batches. In each batch we correctly have a proportion of 0.5 labelled samples. We also correctly see every labelled item at each epoch. As there is much more unlabelled samples than labelled ones, we can't see them all in one peoch so the sampler keeps in memory where it stopped sampling unlabelled items at every last batch of an epoch and continues to sample the next unseen unlabelled samples in the next epoch.

Note than when every sample of one subset (labelled or unlabelled) have been seen, those are shuffled (as we can see after the first epoch with labelled items, or after the first batch of Epoch 2 with unlabelled items).

Another thing to notice is about proportion. If we had set 'proportion=0.6', than we would expect 4*0.6=2.4 labelled samples per batch, which would be rounded to 2. It would not change the behavior of the sampler. But if we chose 'proportion=0.65', than we woudl expect 4*0.65=2.6 labelled samples per batch, which would be rounded to 3. Let's see if this if true:

# We first try with proportion=0.6

sampler = smp.sampler.JointSampler(dataset=dataset, batch_size=4, proportion=0.6)

dataloader = DataLoader(dataset, batch_sampler=sampler)

# We simulate 5 epochs

for epoch in range(5):

print(f'----------Epoch {epoch}----------')

for x, y in dataloader:

print(x)

----------Epoch 0---------- tensor([1, 2, 7, 8], dtype=torch.int32) tensor([ 3, 4, 9, 10], dtype=torch.int32) tensor([ 5, 6, 11, 12], dtype=torch.int32) ----------Epoch 1---------- tensor([ 4, 1, 13, 14], dtype=torch.int32) tensor([ 5, 2, 15, 16], dtype=torch.int32) tensor([ 3, 6, 17, 18], dtype=torch.int32) ----------Epoch 2---------- tensor([ 4, 5, 19, 20], dtype=torch.int32) tensor([ 2, 6, 16, 14], dtype=torch.int32) tensor([ 1, 3, 9, 15], dtype=torch.int32) ----------Epoch 3---------- tensor([ 5, 1, 10, 18], dtype=torch.int32) tensor([ 3, 4, 20, 19], dtype=torch.int32) tensor([ 2, 6, 7, 12], dtype=torch.int32) ----------Epoch 4---------- tensor([ 5, 3, 11, 17], dtype=torch.int32) tensor([ 2, 6, 8, 13], dtype=torch.int32) tensor([ 1, 4, 14, 15], dtype=torch.int32)

As we can see it didn't change anything. But if we try with proportion=0.65, we obtain only two batches per epoch because we now have 3 labelled items per batch.

# We first try with proportion=0.6

sampler = smp.sampler.JointSampler(dataset=dataset, batch_size=4, proportion=0.65)

dataloader = DataLoader(dataset, batch_sampler=sampler)

# We simulate 5 epochs

for epoch in range(5):

print(f'----------Epoch {epoch}----------')

for x, y in dataloader:

print(x)

----------Epoch 0---------- tensor([1, 2, 3, 7], dtype=torch.int32) tensor([4, 5, 6, 8], dtype=torch.int32) ----------Epoch 1---------- tensor([3, 2, 5, 9], dtype=torch.int32) tensor([ 6, 4, 1, 10], dtype=torch.int32) ----------Epoch 2---------- tensor([ 4, 2, 5, 11], dtype=torch.int32) tensor([ 6, 1, 3, 12], dtype=torch.int32) ----------Epoch 3---------- tensor([ 6, 2, 5, 13], dtype=torch.int32) tensor([ 1, 3, 4, 14], dtype=torch.int32) ----------Epoch 4---------- tensor([ 4, 1, 5, 15], dtype=torch.int32) tensor([ 2, 6, 3, 16], dtype=torch.int32)

Finally, it is good to know that the sampler is also capable to performs full batches of labelled or unlabelled items. Simply adjust the proportion.

# Compelte case (full labelled)

sampler = smp.sampler.JointSampler(dataset=dataset, batch_size=4, proportion=1.0)

dataloader = DataLoader(dataset, batch_sampler=sampler)

# We simulate 5 epochs

for epoch in range(5):

print(f'----------Epoch {epoch}----------')

for x, y in dataloader:

print(x)

----------Epoch 0---------- tensor([1, 2, 3, 4], dtype=torch.int32) tensor([5, 6], dtype=torch.int32) ----------Epoch 1---------- tensor([5, 3, 4, 2], dtype=torch.int32) tensor([6, 1], dtype=torch.int32) ----------Epoch 2---------- tensor([2, 5, 4, 6], dtype=torch.int32) tensor([3, 1], dtype=torch.int32) ----------Epoch 3---------- tensor([4, 5, 2, 3], dtype=torch.int32) tensor([1, 6], dtype=torch.int32) ----------Epoch 4---------- tensor([4, 3, 6, 5], dtype=torch.int32) tensor([2, 1], dtype=torch.int32)

C:\Users\lboiteau\Documents\Demos\semipy\sampler\jointsampler.py:35: UserWarning: Warning : you are in the complete case. All items will be labelledand no unlabelled items will be seen by the model.

warnings.warn(("Warning : you are in the complete case. All items will be labelled"

# Full unlabelled (proportion to 0)

sampler = smp.sampler.JointSampler(dataset=dataset, batch_size=4, proportion=0.0)

dataloader = DataLoader(dataset, batch_sampler=sampler)

# We simulate 5 epochs

for epoch in range(5):

print(f'----------Epoch {epoch}----------')

for x, y in dataloader:

print(x)

----------Epoch 0---------- tensor([ 7, 8, 9, 10], dtype=torch.int32) tensor([11, 12, 13, 14], dtype=torch.int32) tensor([15, 16, 17, 18], dtype=torch.int32) tensor([19, 20], dtype=torch.int32) ----------Epoch 1---------- tensor([16, 13, 10, 15], dtype=torch.int32) tensor([11, 14, 17, 18], dtype=torch.int32) tensor([ 9, 7, 12, 19], dtype=torch.int32) tensor([ 8, 20], dtype=torch.int32) ----------Epoch 2---------- tensor([10, 14, 17, 20], dtype=torch.int32) tensor([ 9, 7, 15, 16], dtype=torch.int32) tensor([18, 8, 11, 12], dtype=torch.int32) tensor([13, 19], dtype=torch.int32) ----------Epoch 3---------- tensor([12, 11, 13, 17], dtype=torch.int32) tensor([ 8, 9, 10, 7], dtype=torch.int32) tensor([15, 16, 19, 20], dtype=torch.int32) tensor([14, 18], dtype=torch.int32) ----------Epoch 4---------- tensor([ 7, 14, 19, 18], dtype=torch.int32) tensor([10, 8, 17, 9], dtype=torch.int32) tensor([12, 20, 13, 16], dtype=torch.int32) tensor([15, 11], dtype=torch.int32)

C:\Users\lboiteau\Documents\Demos\semipy\sampler\jointsampler.py:39: UserWarning: Warning : you chose a proportion of 0. All items will be unlabelledand no labelled items will be seen by the model.

warnings.warn(("Warning : you chose a proportion of 0. All items will be unlabelled"